Numerous studies have explored humanoid robot navigation in relatively stable environments, where obstacles are static and sparsely distributed. Some cutting-edge approaches have begun to incorporate moving obstacles alongside static barriers. However, these dynamic obstacles are still sparsely distributed around the bipedal robot, which makes the navigation problem considerably simpler than it is in genuinely crowded environments. Additionally, the disturbances are typically assumed to be simple: for example, obstacles move more slowly than the robot, and there are no social interactions between humans and the robot. Moreover, humanoid robots are assumed to walk on flat ground, so the terrain adaptability of bipedal robots is not fully exploited. These simplifications and assumptions make it difficult to deploy bipedal robots in densely populated, human-rich environments with uneven terrain. Our study addresses these limitations by focusing on more realistic, socially interactive environments in which dynamic pedestrians and static obstacles are densely distributed around the bipedal robot. In parallel, we are developing a terrain-aware social navigation framework that enables humanoid robots to traverse rough terrain while safely interacting with pedestrians.

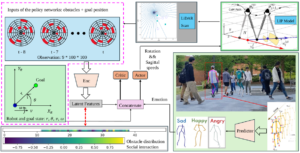

This study presents a deep reinforcement learning (DRL)-based navigation framework that enables humanoid robots to move through socially interactive environments in an emotion-aware and terrain-aware manner. We represent dynamic environments with sequential LiDAR grid maps, from which we extract latent features that capture implicit collision areas and complex social interactions. We further incorporate human emotions into the navigation structure to strengthen socially aware navigation among humans. An end-to-end DRL architecture then maps the latent features and human emotions directly to the bipedal robot's actions, yielding emotion-aware, collision-free locomotion in social contexts. In addition, we plan to integrate a terrain-aware mechanism to fully exploit the advantages of humanoid robots on rough terrain.
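The exact preprocessing used in this work is not described here, so the following is only a minimal sketch of what a "sequential LiDAR grid map" observation could look like. It assumes a planar 2D LiDAR scan given as ranges at evenly spaced angles, a robot-centric 64×64 binary occupancy grid at 0.1 m per cell, and a history of the last k grids stacked along a leading time axis. The names `scan_to_grid` and `GridHistory`, and all parameter values, are hypothetical illustrations rather than the authors' implementation.

```python
from collections import deque

import numpy as np


def scan_to_grid(ranges, angle_min, angle_increment, grid_size=64, resolution=0.1):
    """Hypothetical helper: rasterize one 2D LiDAR scan into a robot-centric occupancy grid.

    Assumes the robot sits at the grid center; rows index y and columns index x.
    """
    grid = np.zeros((grid_size, grid_size), dtype=np.float32)
    ranges = np.asarray(ranges, dtype=np.float64)
    angles = angle_min + angle_increment * np.arange(ranges.size)

    # Drop beams with no return (inf/nan) before projecting to Cartesian coordinates.
    valid = np.isfinite(ranges)
    xs = ranges[valid] * np.cos(angles[valid])
    ys = ranges[valid] * np.sin(angles[valid])

    # Convert metric coordinates to cell indices, offset so the robot is at the center.
    half = grid_size // 2
    cols = np.floor(xs / resolution).astype(int) + half
    rows = np.floor(ys / resolution).astype(int) + half

    # Keep only hits that fall inside the grid and mark those cells as occupied.
    inside = (rows >= 0) & (rows < grid_size) & (cols >= 0) & (cols < grid_size)
    grid[rows[inside], cols[inside]] = 1.0
    return grid


class GridHistory:
    """Hypothetical buffer that keeps the last k grids and stacks them as a (k, H, W) array."""

    def __init__(self, k=4, grid_size=64):
        self.k = k
        self.grid_size = grid_size
        self.frames = deque(maxlen=k)  # oldest grid is dropped automatically

    def push(self, grid):
        self.frames.append(grid)

    def observation(self):
        frames = list(self.frames)
        # Pad with empty grids (oldest positions) until k frames have been observed.
        empty = np.zeros((self.grid_size, self.grid_size), dtype=np.float32)
        pad = [empty] * (self.k - len(frames))
        return np.stack(pad + frames, axis=0)
```

In a DRL pipeline of the kind described above, a stacked array like this would typically be fed to a learned encoder so that motion of nearby pedestrians shows up as changes across the time axis; how the authors encode it and fuse it with emotion information is not specified here.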

Research challenges: How can interactive human motions be simulated on rough terrain? Humans should interact with each other according to social rules, and at the same time they should consider and adapt to the humanoid robot's locomotion. How can human emotions be estimated, and how should emotion-related states and rewards be designed for our DRL pipeline? How can we build a terrain-aware locomotion controller that enables the humanoid robot to stably traverse challenging terrain such as stairs, grass, and construction areas? How can the robot comply with social rules while simultaneously navigating rough terrain?

Publication:

Wei Zhu, Abirath Raju, Abdulaziz Shamsah, Anqi Wu, Seth Hutchinson, and Ye Zhao. EmoBipedNav: Emotion-aware Social Navigation for Bipedal Robots with Deep Reinforcement Learning. Submitted, 2025.