This research direction focuses on formal methods and decision-making algorithms for dynamic terrestrial locomotion and aerial manipulation in complex, human-populated environments. We aim to develop scalable planning and decision algorithms that enable heterogeneous robot teammates to interact dynamically with unstructured environments and collaborate with humans.


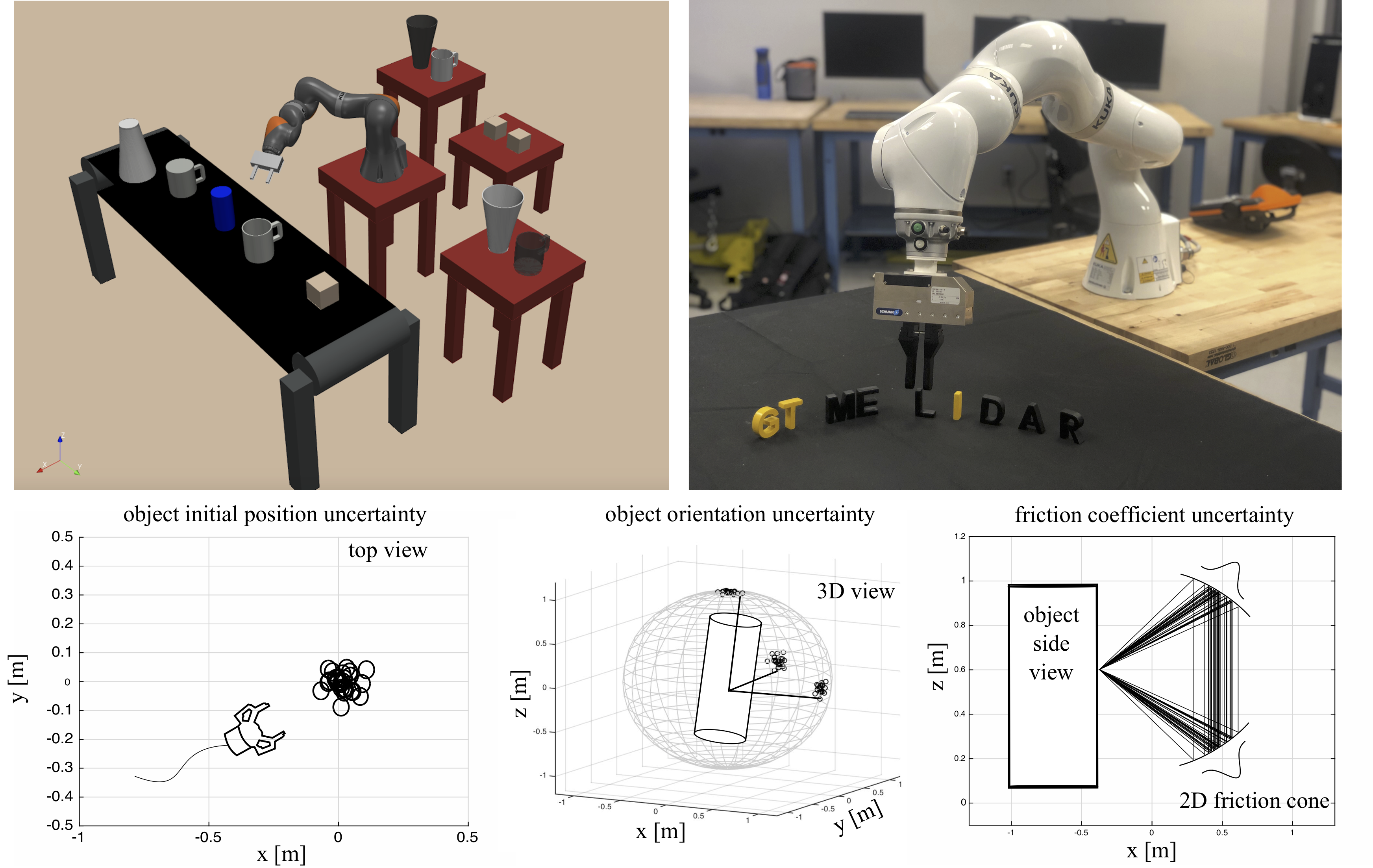

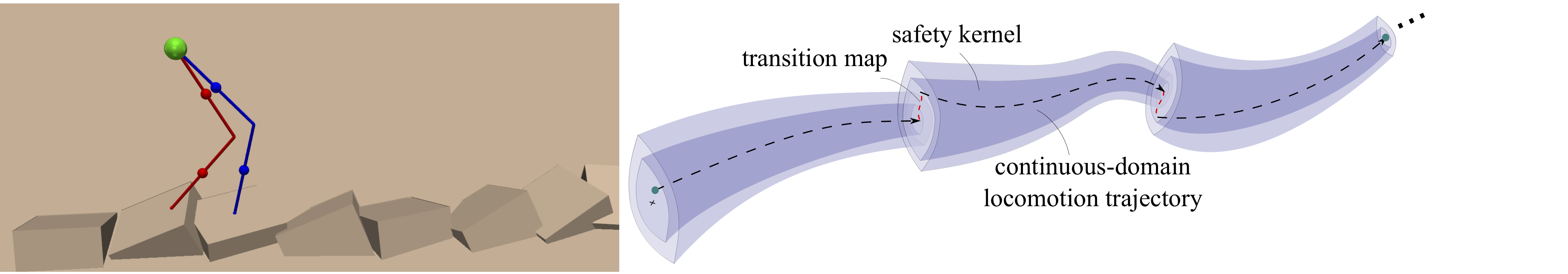

Trajectory optimization through contact encompasses a powerful set of algorithms for designing dynamic robot motions that involve physical interaction with the environment. However, the trajectories these algorithms produce can be difficult to execute in practice because of several common sources of uncertainty: robot model errors, external disturbances, and imperfect estimates of surface geometry and friction.
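
To make the friction-estimate issue concrete, the following minimal sketch checks planned contact forces against a Coulomb friction cone, first with the nominal friction coefficient and then with a pessimistic one. The force values, the coefficient `0.6`, the error bound `0.2`, and the helper names are all illustrative assumptions, not part of the project's actual method.

```python
import math

def in_friction_cone(force, mu):
    """Return True if a contact force (fx, fy, fz) satisfies the Coulomb
    friction cone ||f_tangential|| <= mu * f_normal with f_normal >= 0."""
    fx, fy, fz = force
    return fz >= 0.0 and math.hypot(fx, fy) <= mu * fz

def robust_to_friction(forces, mu_nominal, mu_error):
    """Check each force against the worst-case (lowest) friction coefficient
    within an assumed error bound around the nominal estimate."""
    mu_worst = max(mu_nominal - mu_error, 0.0)
    return [in_friction_cone(f, mu_worst) for f in forces]

# Hypothetical planned contact forces in newtons (x, y tangential; z normal).
planned_forces = [(10.0, 0.0, 50.0), (25.0, 0.0, 50.0), (35.0, 0.0, 50.0)]

# Feasibility under the nominal estimate versus the pessimistic estimate.
nominal = [in_friction_cone(f, 0.6) for f in planned_forces]
robust = robust_to_friction(planned_forces, mu_nominal=0.6, mu_error=0.2)

print(nominal)  # [True, True, False]
print(robust)   # [True, False, False]
```

The second force is feasible for the nominal model but would slip if the true friction coefficient were at the low end of the assumed range, which is the kind of gap between planned and executed trajectories described above.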

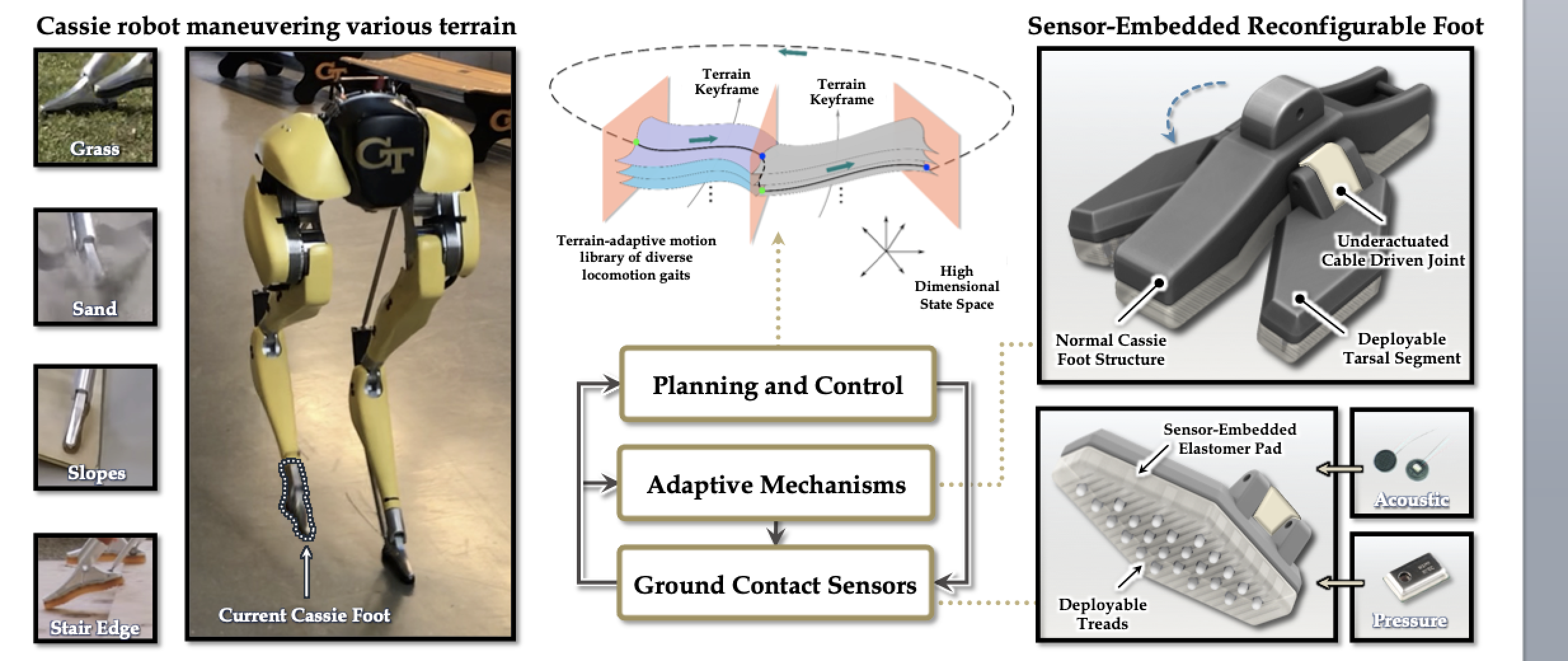

The objective of this research project is to design closed-loop perception, navigation, and task and motion planners that allow dynamic legged robots to autonomously navigate complex indoor environments while accomplishing specific tasks such as office disinfection.

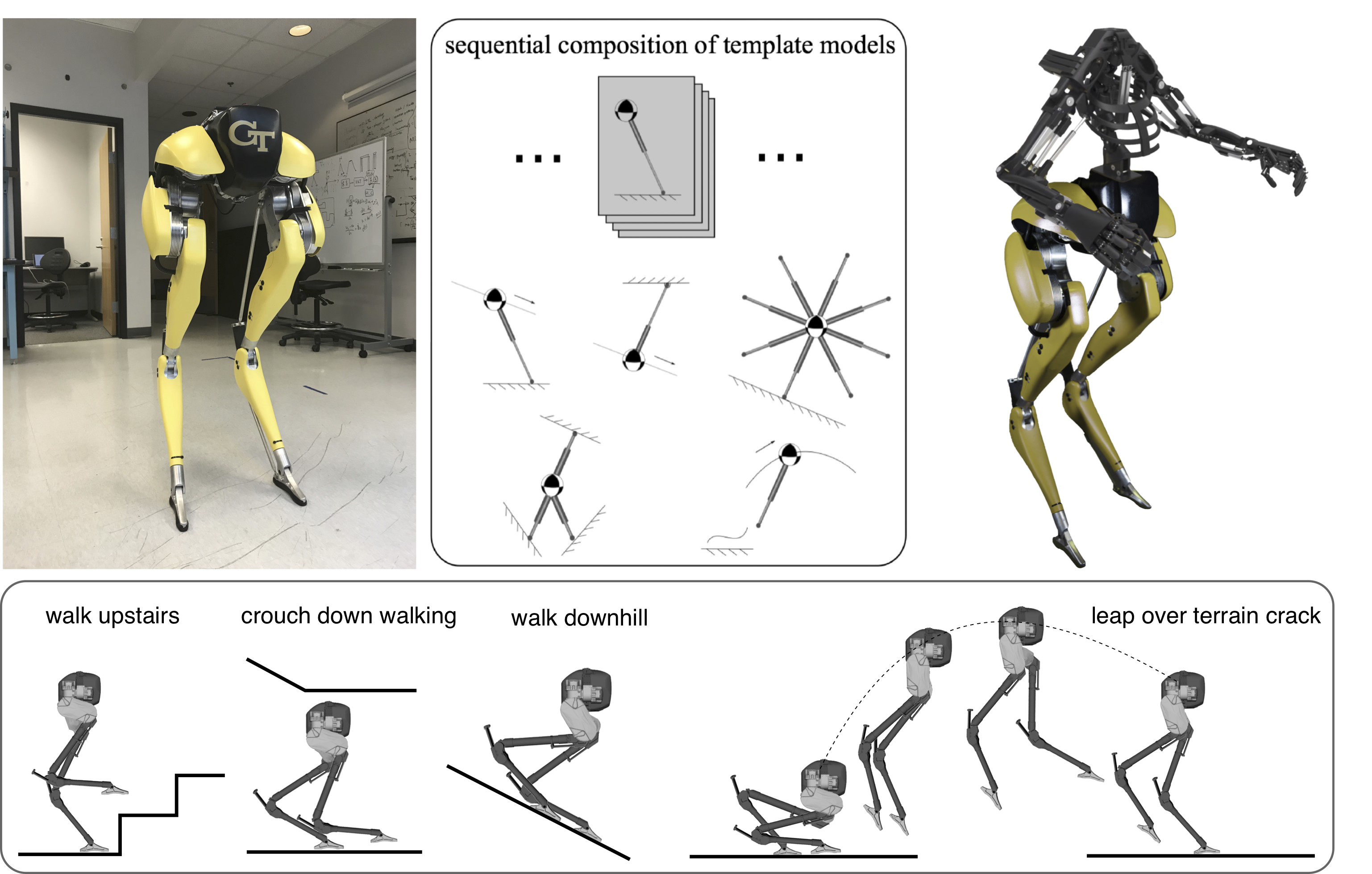

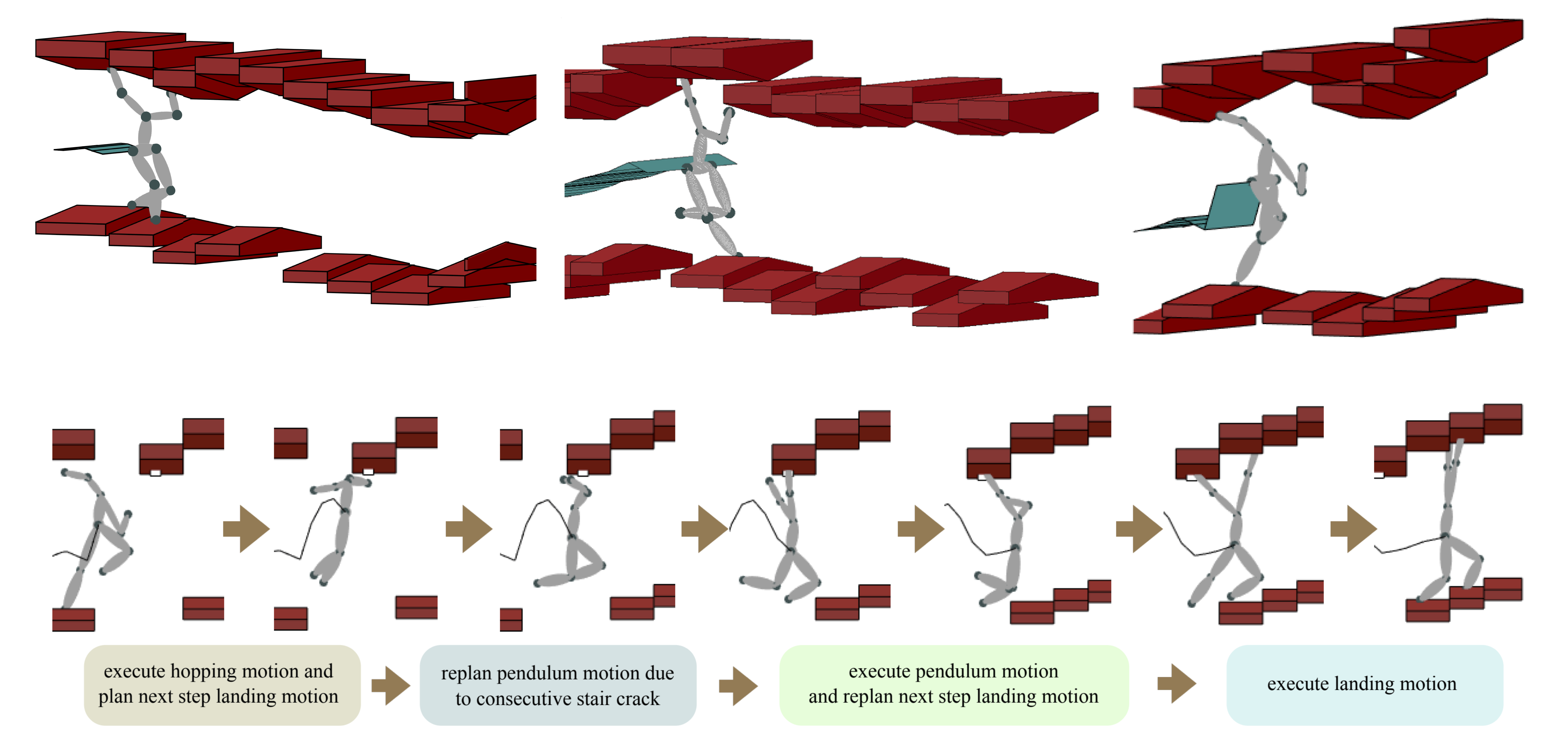

This project takes a step toward formally synthesizing high-level reactive planners for unified legged and armed locomotion in constrained environments. We formulate a two-player temporal logic game between the contact planner and its possibly adversarial environment.
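
As a rough illustration of the game-based view, the sketch below solves a turn-based safety game on a small finite graph: the planner picks an action, the environment adversarially picks one of that action's possible successors, and the planner wins if it can stay inside a safe set forever. Safety is only the simplest fragment of temporal logic, and the states, actions, and function names here are hypothetical; the project's actual specification and synthesis procedure may differ.

```python
def safety_winning_region(transitions, safe):
    """Greatest fixed point of W = safe ∩ CPre(W): the states from which the
    planner can keep the play inside `safe` whatever the environment does.

    transitions[state][action] is the set of successors the environment may
    choose after the planner takes `action` in `state`.
    """
    win = set(safe)
    while True:
        next_win = {
            s for s in win
            if any(succs and succs <= win
                   for succs in transitions.get(s, {}).values())
        }
        if next_win == win:
            return win
        win = next_win

def extract_strategy(transitions, win):
    """For each winning state, pick one action whose successors all stay in `win`."""
    return {
        s: next(a for a, succs in transitions[s].items()
                if succs and succs <= win)
        for s in win
    }

# Toy contact-planning game (all names are illustrative assumptions).
transitions = {
    "start": {"go_left": {"ice"}, "go_right": {"flat"}},
    "flat": {"walk": {"flat", "gap"}},
    "gap": {"walk": {"fallen"}, "grasp_rail": {"flat"}},
    "ice": {"walk": {"fallen", "flat"}},
    "fallen": {},
}
safe = {"start", "flat", "gap", "ice"}

win = safety_winning_region(transitions, safe)
strategy = extract_strategy(transitions, win)
print(sorted(win))        # ['flat', 'gap', 'start']
print(strategy["gap"])    # grasp_rail
```

In this toy example the planner avoids `ice`, where the environment can force a fall, and uses an arm contact (`grasp_rail`) to cross the gap. The resulting strategy reacts to whichever successor the environment picks at each step.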

Locomotion over cluttered outdoor environments requires the contact foot to be aware of terrain geometries, stiffness, and granular media properties. Although current internal and external mechanical and visual sensors have enabled high-performance state estimation for legged locomotion, rich ground contact sensing capability is still a bottleneck to improved control in austere conditions.