Quadrupedal robot platforms have seen a boom in agile, highly dynamic low-level controllers that enable complex behaviors such as parkour, crouching, and jumping. However, motion planners capable of commanding such maneuvers are still lacking. Our research focuses on developing accompanying motion planners and navigation frameworks that allow quadrupeds to be deployed autonomously in unknown environments. We develop semantic environment representations tailored to legged morphologies that help determine when and where to step, fast egocentric motion planners that can handle dynamic environments, and robust whole-body controllers that allow these robots to track desired trajectories while reacting to disturbances.

Figure 1. Our lab’s Unitree Go2 outfitted for navigation with an Intel RealSense D435 depth camera and a T265 tracking camera.

Figure 2. Example torso paths generated by the Bezier Gap planner for the lab’s Unitree A1 [1].

Perception:

In order to fully exploit the capabilities of a quadrupedal platform, the environment representation used by its navigation framework must capture the affordances of the system. That is, if the quadruped can step over debris or duck underneath overhanging obstacles, the environment representation should reflect these possibilities. To do so, we devise a set of semantic labels [2] that capture whether a part of the environment can be stepped onto (“steppable”), stepped over (“passable”), or must be maneuvered around (“non-passable”) by a quadrupedal system. We generate training data through synthetic scene generation built from primitive shapes and use the trained model to inform a foothold planner for online navigation.
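As a rough illustration of how ground-truth labels for the three classes could be produced from synthetic scenes, the sketch below builds a heightmap from box primitives and labels each cell by its height and the local drop-off to its neighbors. The thresholds (`MAX_STEP_UP`, `MAX_CLEARANCE`, `MAX_DROP`), the heightmap representation, and the labeling rule are all hypothetical choices made for this example; the labels in [2] are predicted from depth images by a trained model, and the actual data pipeline may differ.

```python
import random
from typing import List, Tuple

# Hypothetical thresholds in meters (illustrative only, not taken from [2]).
MAX_STEP_UP = 0.15    # tallest surface the robot is assumed to step onto
MAX_CLEARANCE = 0.25  # tallest obstacle a swing leg is assumed to clear
MAX_DROP = 0.02       # largest drop to a neighbor tolerated under a foothold

STEPPABLE, PASSABLE, NON_PASSABLE = 0, 1, 2

# A box primitive: (row, col, n_rows, n_cols, height).
Box = Tuple[int, int, int, int, float]
Grid = List[List[float]]


def make_scene(size: int, boxes: List[Box]) -> Grid:
    """Place axis-aligned box primitives on flat ground to form a heightmap."""
    grid = [[0.0] * size for _ in range(size)]
    for r0, c0, nr, nc, h in boxes:
        for r in range(r0, min(r0 + nr, size)):
            for c in range(c0, min(c0 + nc, size)):
                grid[r][c] = max(grid[r][c], h)
    return grid


def random_scene(size: int, n_boxes: int, seed: int = 0) -> Grid:
    """Randomly sized and placed boxes, in the spirit of a primitive-shape generator."""
    rng = random.Random(seed)
    boxes = [
        (rng.randrange(size), rng.randrange(size),
         rng.randint(1, 4), rng.randint(1, 4), rng.uniform(0.05, 0.5))
        for _ in range(n_boxes)
    ]
    return make_scene(size, boxes)


def label_cell(grid: Grid, r: int, c: int) -> int:
    size = len(grid)
    h = grid[r][c]
    if h > MAX_CLEARANCE:
        return NON_PASSABLE  # too tall to step over: must be maneuvered around
    if h > MAX_STEP_UP:
        return PASSABLE  # can be stepped over but not onto
    # A cell at the edge of a drop-off is not treated as a safe foothold.
    neighbors = [grid[rr][cc] for rr, cc in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1))
                 if 0 <= rr < size and 0 <= cc < size]
    drop = max((h - n for n in neighbors), default=0.0)
    return STEPPABLE if drop <= MAX_DROP else PASSABLE


def label_scene(grid: Grid) -> List[List[int]]:
    return [[label_cell(grid, r, c) for c in range(len(grid))] for r in range(len(grid))]
```

Under these assumed thresholds, a 3x3 box 0.1 m tall gets a steppable interior and passable edges, while a 0.4 m post is labeled non-passable.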

Fig 3. Example input depth images, output steppability masks, and ground truth training labels for the semantic steppability prediction task.

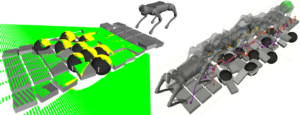

Fig 4. (Left) Overlaid steppability labels on stepping stones environment, (Right) Example collision-free trajectory for a steppability-informed foothold planner.

Planning:

Torso-level motion planning: We employ a family of gap-based planners for torso-level motion planning. These planners operate directly on egocentric raw sensor data, performing planning in the perception space. The result is a computationally efficient pipeline that bypasses perception data pre-processing and casts torso-level planning as a series of robot-centric decision processes. We have extended this family of planners to account for quadrupedal geometric and kinematic constraints [1] and to handle dynamic environments [3]. Our next step is to fuse these two threads so that quadrupedal plans can be generated in unknown, changing environments.
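The idea of planning in perception space can be illustrated on a single planar range scan: free-space gaps are found directly in the raw beams, without building a map. The sketch below treats a gap as a run of consecutive beams whose range exceeds a hypothetical free-space radius, and keeps only gaps whose chord width at that radius exceeds the robot's width (a crude stand-in for the quadrupedal geometric constraints in [1]). The gap definition, `free_radius`, `robot_width`, and the goal-bearing selection rule are assumptions for illustration; the planners in [1] and [3] detect, propagate, and plan through gaps with considerably more machinery.

```python
import math
from dataclasses import dataclass
from typing import List, Optional, Sequence


@dataclass
class Gap:
    start_idx: int      # first free beam index
    end_idx: int        # last free beam index (inclusive)
    start_angle: float  # bearing of first free beam [rad]
    end_angle: float    # bearing of last free beam [rad]
    width: float        # chord width at free_radius [m]


def detect_gaps(ranges: Sequence[float], angle_min: float, angle_inc: float,
                free_radius: float, robot_width: float) -> List[Gap]:
    """Find runs of consecutive beams that see past free_radius and are wide enough."""
    gaps: List[Gap] = []
    n, i = len(ranges), 0
    while i < n:
        if ranges[i] < free_radius:
            i += 1
            continue
        j = i
        while j + 1 < n and ranges[j + 1] >= free_radius:
            j += 1
        a0 = angle_min + i * angle_inc
        a1 = angle_min + j * angle_inc
        # Chord between the two boundary beams at free_radius (capped at a diameter).
        width = 2.0 * free_radius * math.sin(min(a1 - a0, math.pi) / 2.0)
        if width >= robot_width:
            gaps.append(Gap(i, j, a0, a1, width))
        i = j + 1
    return gaps


def select_gap(gaps: List[Gap], goal_bearing: float) -> Optional[Gap]:
    """Pick the gap whose center bearing is closest to the goal bearing."""
    return min(gaps, key=lambda g: abs(0.5 * (g.start_angle + g.end_angle) - goal_bearing),
               default=None)
```

Narrow openings are discarded before any trajectory is generated, which is one simple way a robot's footprint can enter an egocentric, map-free planner.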

Fig 5. Example “gaps” of free space that are detected, propagated, and planned through using the dynamic gap planner.

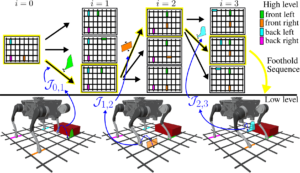

Fig 6. Submission video for the dynamic gap project.

Foothold-level planning: We address the hybrid planning problem of legged locomotion through a hierarchical approach that applies a different planning paradigm to the discrete and continuous portions of the planning space. We perform a graph search over contact mode families in the local environment to generate candidate footholds, and then run a series of trajectory optimization subproblems that refine these footholds and generate whole-body trajectories transitioning between contact stances. An offline, experience-based trajectory and cost library coordinates the two planning layers and leverages past planning attempts to expedite future ones.
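One way to picture the coordination between the discrete and continuous layers is a search that prices each contact transition with a cached cost when one exists and with a cheap estimate otherwise, then calls trajectory optimization only on the transitions of the chosen path and writes the results back for future queries. In the sketch below, the stance graph, `estimate`, and `trajopt` (a hypothetical stand-in that returns a cost, or `None` when a transition is infeasible) are assumptions, and plain Dijkstra search replaces whatever search and heuristics the framework in [4] actually uses.

```python
import heapq
import math
from typing import Callable, Dict, Hashable, List, Optional, Tuple

Node = Hashable
CostFn = Callable[[Node, Node], float]


def shortest_path(graph: Dict[Node, List[Node]], start: Node, goal: Node,
                  edge_cost: CostFn) -> Optional[List[Node]]:
    """Dijkstra over contact stances; infinite-cost edges are never relaxed."""
    dist = {start: 0.0}
    prev: Dict[Node, Node] = {}
    heap = [(0.0, 0, start)]  # (cost, tie-breaker, node)
    counter = 1
    while heap:
        d, _, u = heapq.heappop(heap)
        if u == goal:
            path = [u]
            while path[-1] != start:
                path.append(prev[path[-1]])
            return path[::-1]
        if d > dist.get(u, math.inf):
            continue  # stale heap entry
        for v in graph.get(u, []):
            nd = d + edge_cost(u, v)
            if nd < dist.get(v, math.inf):
                dist[v], prev[v] = nd, u
                heapq.heappush(heap, (nd, counter, v))
                counter += 1
    return None


def experience_informed_plan(graph: Dict[Node, List[Node]], start: Node, goal: Node,
                             estimate: CostFn,
                             trajopt: Callable[[Node, Node], Optional[float]],
                             library: Dict[Tuple[Node, Node], float],
                             max_iters: int = 10) -> Optional[List[Node]]:
    """Search with library costs, refine the path with trajopt, cache results, replan."""
    for _ in range(max_iters):
        path = shortest_path(graph, start, goal,
                             lambda u, v: library.get((u, v), estimate(u, v)))
        if path is None:
            return None
        feasible = True
        for u, v in zip(path, path[1:]):
            if (u, v) not in library:  # optimize only transitions never seen before
                cost = trajopt(u, v)
                library[(u, v)] = math.inf if cost is None else cost
            if math.isinf(library[(u, v)]):
                feasible = False  # prune this transition and replan
                break
        if feasible:
            return path
    return None
```

A second query over the same stances reuses the library and triggers no new optimization calls, which is the kind of speedup an experience library is meant to provide.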

Fig 7. Diagram for the two planning layers of our multi-modal planning framework [4].

Fig 8. Submission video for our multi-modal motion planning project.

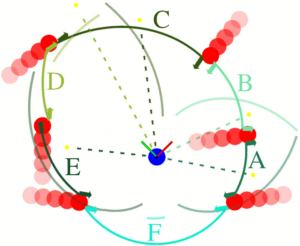

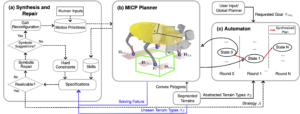

Gait-level planning: We integrate reactive synthesis with a Mixed-Integer Convex Program (MICP) [5], leveraging the strengths of both approaches in gait-level planning that adapts to various terrain types. Specifically, we employ reactive synthesis to provide formal safety guarantees at the abstraction level and MICP-based footstep planning to ensure the physical feasibility of each symbolic transition. To handle specifications that are unrealizable because too few feasible symbolic transitions are available, we use a symbolic repair process that identifies dynamically feasible missing skills in both the offline and online phases. During online execution, we re-solve the MICP using real-world terrain data to bridge the gap between offline synthesis and online execution, and apply online symbolic repair to handle unforeseen terrains.
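Realizability and symbolic repair can be illustrated on a much simpler problem than the full synthesis in [5]: a reachability game in which the robot chooses a skill in each symbolic state and the environment chooses which of that skill's possible successor states occurs (for instance, which terrain appears next). The specification “eventually reach the goal” is realizable exactly when the initial state lies in the winning region, computed as a fixed point. A repair loop then adds candidate skills that pass a feasibility check until the specification becomes realizable. The state and skill names, the candidate list, and `is_feasible` (standing in for solving the MICP) are hypothetical.

```python
from typing import Callable, Dict, Iterable, List, Optional, Set, Tuple

# skills[state][skill] = set of symbolic states the environment may lead to
Skills = Dict[str, Dict[str, Set[str]]]
Candidate = Tuple[str, str, Set[str]]  # (state, skill name, successor states)


def winning_region(skills: Skills, goal: Set[str]) -> Set[str]:
    """States from which some skill choice forces reaching the goal (attractor)."""
    win = set(goal)
    changed = True
    while changed:
        changed = False
        for state, options in skills.items():
            if state in win:
                continue
            # Winning if some skill has only winning successors, whatever the environment picks.
            if any(succ and succ <= win for succ in options.values()):
                win.add(state)
                changed = True
    return win


def realizable(skills: Skills, init: str, goal: Set[str]) -> bool:
    return init in winning_region(skills, goal)


def symbolic_repair(skills: Skills, init: str, goal: Set[str],
                    candidates: Iterable[Candidate],
                    is_feasible: Callable[[str, str, Set[str]], bool]
                    ) -> Optional[List[Tuple[str, str]]]:
    """Add feasible candidate skills until the spec is realizable; None if it never is."""
    added: List[Tuple[str, str]] = []
    for state, name, succ in candidates:
        if realizable(skills, init, goal):
            break
        if is_feasible(state, name, succ):  # stand-in for an MICP feasibility check
            skills.setdefault(state, {})[name] = set(succ)
            added.append((state, name))
    return added if realizable(skills, init, goal) else None
```

For example, if trotting on flat ground may lead either to the goal or to stairs, and no stair skill exists yet, the specification is unrealizable until a feasible stair skill is added.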

Fig 9. System overview for offline synthesis and online execution. During offline synthesis (black dashed arrows), an initial set of motion primitives is given, and symbolic skills are iteratively generated by solving the MICP. When the task specifications are unrealizable, symbolic repair is triggered to seek missing skills. During online execution (black solid arrows), the MICP is solved again using online terrain segments, and the symbolic state advances only when a solution is found. Online repair is triggered (blue solid arrow) when a solver failure or an unseen terrain is detected.
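The online branch of Fig 9 can be read as a simple loop: for each incoming terrain segment, the offline strategy proposes a skill, the MICP is re-solved on the observed terrain, and the symbolic state advances only if a solution is found; otherwise, online repair is attempted. The sketch below assumes a strategy that maps a terrain label directly to a skill, which is a large simplification of a synthesized strategy over symbolic states, and `solve_micp` and `online_repair` are hypothetical callables standing in for the actual solver and repair procedure.

```python
from typing import Any, Callable, Dict, List, Optional, Sequence, Tuple

Segment = Tuple[str, Any]    # (terrain label, terrain data)
Step = Tuple[str, str, Any]  # (terrain label, skill used, footstep plan)


def execute_online(segments: Sequence[Segment], strategy: Dict[str, str],
                   solve_micp: Callable[[str, Any], Optional[Any]],
                   online_repair: Callable[[str, Any], Optional[str]]
                   ) -> Tuple[List[Step], bool]:
    """Advance through terrain segments; re-solve the MICP online and repair on failure."""
    executed: List[Step] = []
    for label, terrain in segments:
        skill = strategy.get(label)  # None means the terrain was never seen offline
        plan = solve_micp(skill, terrain) if skill is not None else None
        if plan is None:
            # Solver failure or unseen terrain: trigger online repair.
            skill = online_repair(label, terrain)
            plan = solve_micp(skill, terrain) if skill is not None else None
            if plan is None:
                return executed, False  # symbolic state does not advance
            strategy[label] = skill  # reuse the repaired skill for later segments
        executed.append((label, skill, plan))  # symbolic state advances here
    return executed, True
```

Caching the repaired skill in the strategy means a terrain type repaired once is handled directly the next time it appears.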

Representative publications

Ziyi Zhou, Qian Meng, Hadas Kress-Gazit, and Ye Zhao. Physically-Feasible Reactive Synthesis for Terrain-Adaptive Locomotion. Submitted, 2025.

Ziyi Zhou, Qian Meng, Hadas Kress-Gazit, and Ye Zhao. Physically-Feasible Reactive Synthesis for Terrain-Adaptive Locomotion via Trajectory Optimization and Symbolic Repair. 2025 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2025.

Shiyu Feng, Ziyi Zhou, Justin S. Smith, Max Asselmeier, Ye Zhao, and Patricio A. Vela. GPF-BG: A Hierarchical Vision-Based Planning Framework for Safe Quadrupedal Navigation. IEEE International Conference on Robotics and Automation (ICRA), 2023.

Max Asselmeier, Ye Zhao, and Patricio A. Vela. Steppability-informed Quadrupedal Contact Planning through Deep Visual Search Heuristics. Submitted, 2024.

Max Asselmeier, Dhruv Ahuja, Abdel Zaro, Ahmad Abuaish, Ye Zhao, and Patricio A. Vela. Dynamic Gap: Safe Gap-based Navigation in Dynamic Environments. Submitted, 2024.

Max Asselmeier, Jane Ivanova, Ziyi Zhou, Patricio A. Vela, and Ye Zhao. Hierarchical Experience-informed Navigation for Multi-modal Quadrupedal Rebar Grid Traversal. IEEE International Conference on Robotics and Automation (ICRA), 2024.

Ziyi Zhou, Eohan George, and Ye Zhao. Bridge Mixed-Integer Convex Program and Linear Temporal Logic: Reactive Gait Synthesis and Footstep Planning for Agile Perceptive Locomotion. IROS Workshop on Formal Methods Techniques in Robotics Systems: Design and Control, 2023.