Humanoid robots are designed to perform complex loco-manipulation tasks in human-centered environments. However, achieving robust and dynamic performance in tasks like stair climbing, object manipulation, and agile locomotion is challenging due to the high-dimensional, underactuated dynamics and contact-rich interactions inherent to these robots. This research introduces Opt2Skill, a pipeline that integrates model-based trajectory optimization with reinforcement learning (RL) to enable versatile whole-body humanoid robot loco-manipulation.

Research Goals

1. Dynamic Feasibility and Contact-Rich Interaction: Leveraging dynamically feasible trajectories to address multi-contact transitions, force distribution, and object manipulation in diverse environments.

2. Robust Motion Imitation: Using high-fidelity, model-based data to guide RL for natural, stable, and efficient motion.

3. Sim-to-Real Transfer: Bridging the gap between simulation and real-world performance through domain randomization and robust RL policies.

Methodology

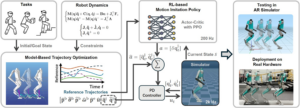

1. Trajectory Optimization (TO): Using Differential Dynamic Programming (DDP) implemented in Crocoddyl to generate optimal trajectories. These trajectories include dynamics, torque limits, and friction constraints, ensuring feasibility and adaptability.

2. Reinforcement Learning: Training RL policies to track these optimal trajectories in simulation (MuJoCo) using Proximal Policy Optimization (PPO). The policies augment reference joint trajectories with residual terms to improve real-world adaptability.

3. Sim-to-Real Transfer: Incorporating domain randomization to train policies that generalize across diverse conditions, enabling zero-shot deployment on hardware.

Overall structure of the Opt2Skill framework. Optimal reference trajectories are generated based on the task goal and robot dynamics model. The RL policy augments the reference trajectories with a residual term, which is applied to a low-level PD controller for torque control. Training includes domain randomization, and the trained policy is able to transfer from the simulation to the real robot.

Research Highlights

Versatile Task Performance: Opt2Skill achieves dynamic locomotion (e.g., walking on rough terrain and stairs), robust object manipulation, and multi-contact tasks like desk-reaching and box-picking.

Data Efficiency: By imitating high-quality model-based trajectories, Opt2Skill reduces training time and improves RL policy performance compared to pure RL approaches training from scratch.

Real-World Validation: Successfully demonstrated on the Digit humanoid robot, achieving whole body loco-manipulation tasks in both controlled and unstructured environments.

Research Challenges

Optimal Data Generation: How can we ensure the quality and diversity of reference trajectories across varied loco-manipulation tasks to maximize RL performance and adaptability?

Scalability: How can we generalize the approach to handle increasingly complex environments and task variations, enabling humanoid robots to perform a broader range of loco-manipulation tasks?

Sim-to-Real: How can we balance accurate dynamics modeling with robust policy design to bridge the gap between simulation and real-world performance, ensuring policies generalize well across diverse environments and physical discrepancies?

Opt2Skill demonstrates the power of combining model-based optimization with RL to tackle some of the toughest challenges in humanoid robot control, enabling robots to achieve human-like versatility and efficiency in complex environments. For more details, visit our project website.

Representative publications:

Fukang Liu, Zhaoyuan Gu, Yilin Cai, Ziyi Zhou, Hyunyoung Jung, Jaehwi Jang, Shijie Zhao, Sehoon Ha, Yue Chen, Danfei Xu, and Ye Zhao. Opt2Skill: Imitating Dynamically-feasible Whole-Body Trajectories for Versatile Humanoid Loco-Manipulation, IEEE Robotics and Automation Letters, 2025. [project website]