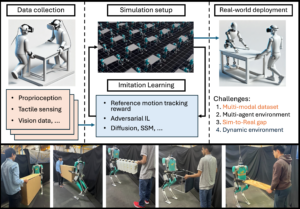

The goal of this research is to develop a whole-body control method that lets humanoid robots perform collaborative transportation tasks with humans. While most recent developments in real-world humanoid control have focused on single-agent motion in open environments, our work extends these capabilities to support dynamic, responsive teamwork in complex, interactive environments. The complexity of human behavior and the need for accurate, adaptive control in contact-rich environments compound the challenge. Our approach combines imitation learning with social skill learning to enable robots to coordinate effectively with humans. We propose a simulated environment for testing our approach, and we are developing a Sim2Real framework to transfer it to a real-world collaborative task.

Research Challenges: To achieve a human-like collaborative transportation experience, this research is organized around three challenges. How can we develop and use a multimodal dataset that integrates and synchronizes multiple data streams, such as vision, force/torque, and proprioception, to accurately model human intention? How can we design a robust multi-agent training algorithm that supports human-robot collaboration, accounting for dynamic interactions and environmental factors? How can we bridge the gap between simulation and real-world applications so that robots operate effectively despite partial observations, sensor limitations, and a limited number of demonstrations?

Representative publications:

SEEC: Stable End-Effector Control with Model-Enhanced Residual Learning for Humanoid Loco-Manipulation. Jaehwi Jang, Zhuoheng Wang, Ziyi Zhou, Feiyang Wu, Ye Zhao. Manuscript under review (submitted 2025). [paper link]